Wolfram Neural Net Repository

Identify the action in a video

Released in 2019, this family of nets consists of three-dimensional (3D) versions of the original MobileNet V1 architecture for video classification. By combining depthwise separable convolutions with 3D convolutions, these lightweight and efficient models achieve much higher video classification accuracy than their two-dimensional counterparts.

Number of models: 8

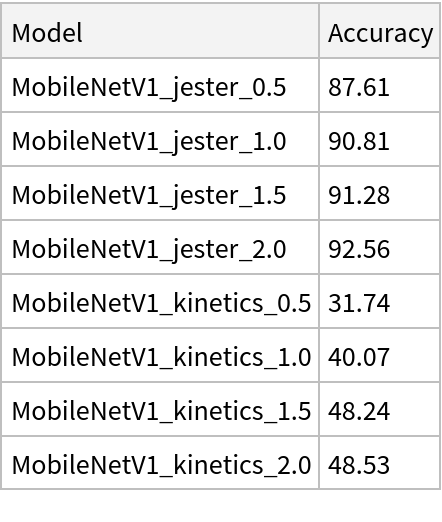

The models achieve the following accuracies on the original ImageNet validation set.

Get the pre-trained net:

| In[1]:= |

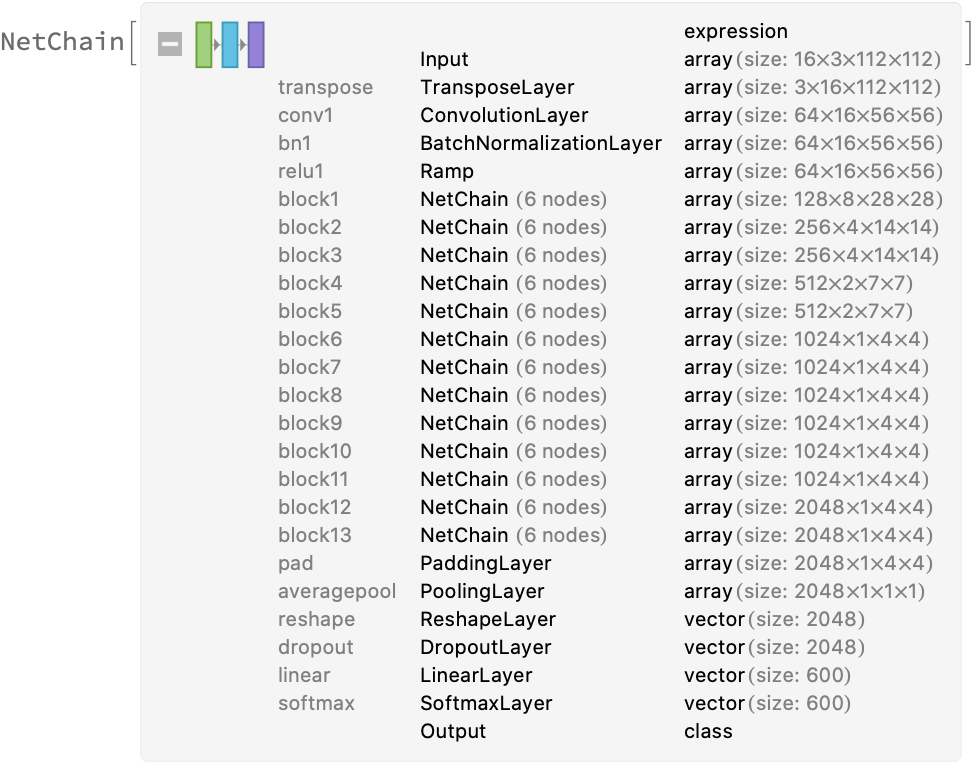

| Out[1]= |  |

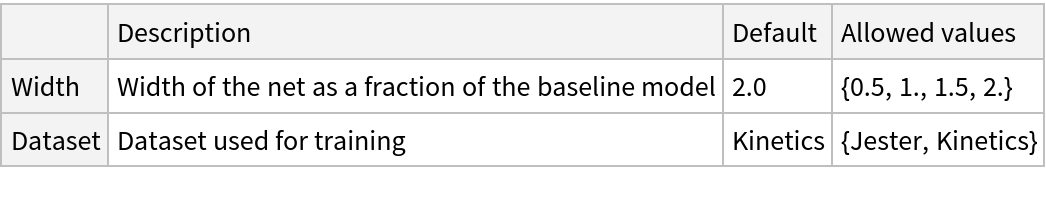

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:

| In[2]:= |

| Out[2]= |  |

Pick a non-default net by specifying the parameters:

| In[3]:= |

| Out[3]= |

Pick a non-default uninitialized net:

| In[4]:= |

| Out[4]= |

Identify the main action in a video:

| In[5]:= |

| In[6]:= |

| Out[6]= |

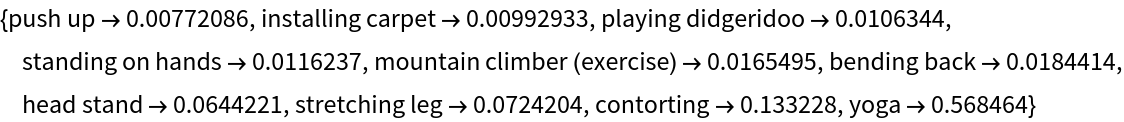

Obtain the probabilities of the 10 most likely actions predicted by the net:

| In[7]:= |

| Out[7]= |  |

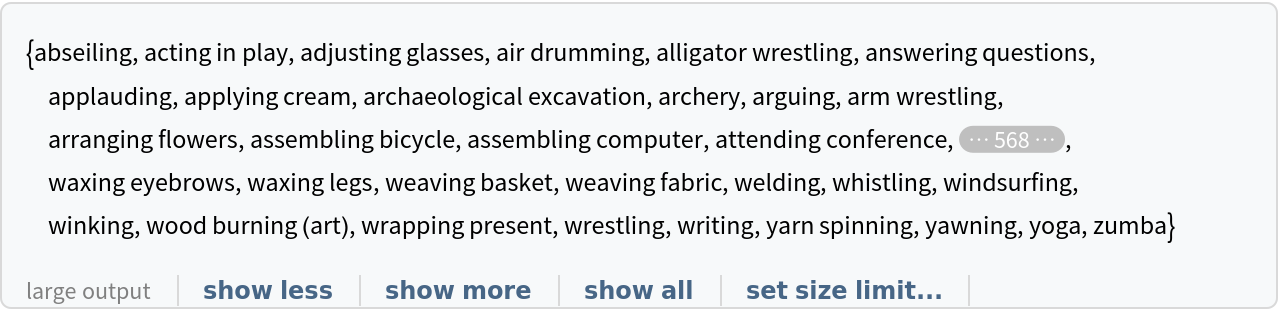
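As a rough illustration of how a top-probabilities query behaves, the sketch below uses a stand-in net rather than the MobileNet-3D model: a single softmax layer with a hypothetical three-class "Class" decoder. The labels, the input scores and the name `toyNet` are made up for the example; only the `{"TopProbabilities", n}` property corresponds to the step above.

```wolfram
(* Stand-in classifier: softmax over 3 scores, decoded into hypothetical action labels *)
toyNet = NetChain[{SoftmaxLayer[]},
   "Input" -> 3,
   "Output" -> NetDecoder[{"Class", {"jumping", "running", "walking"}}]];

(* Made-up raw scores for a single input *)
scores = {1., 3., 2.};

(* Ask for the 2 most likely classes together with their probabilities *)
top = toyNet[scores, {"TopProbabilities", 2}]

(* With no property, the net returns only the single most likely class *)
best = toyNet[scores]
```

The result of the property query is a list of rules of the form class -> probability, which is the shape of output this step produces for the video net.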

Obtain the list of names of all available classes:

| In[8]:= |

| Out[8]= |  |

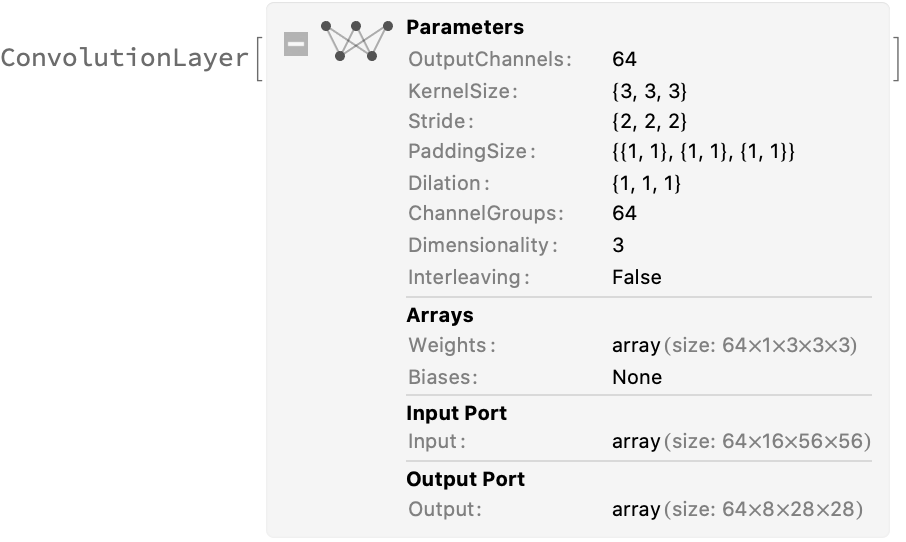

MobileNet-3D V1 is characterized by depthwise separable convolutions. The full convolutional operator is split into two layers. The first layer is called a depthwise convolution, which performs lightweight filtering by applying a single convolutional filter per input channel. This is realized by having a "ChannelGroups" setting equal to the number of input channels:

| In[9]:= |

| Out[9]= |  |

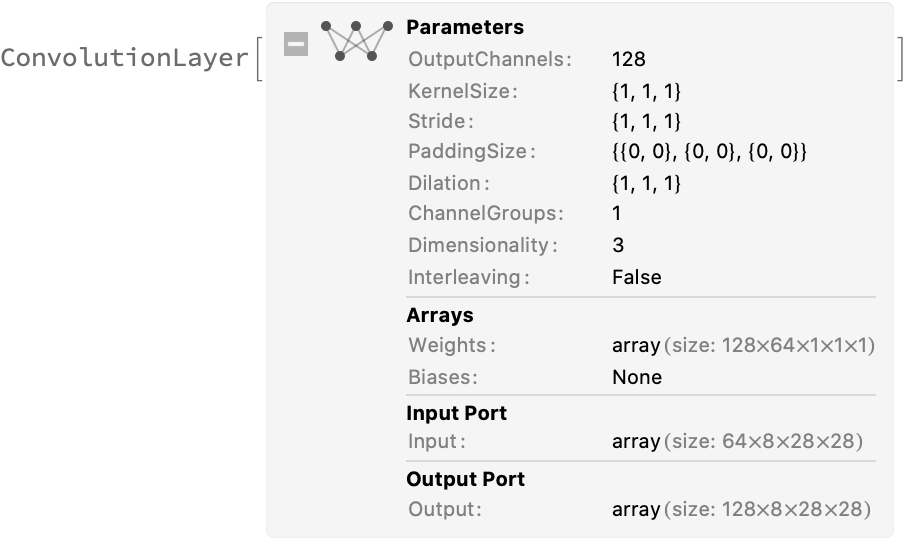

The second layer is a 1⨯1⨯1 convolution called a pointwise convolution, which is responsible for building new features through computing linear combinations of the input channels:

| In[10]:= |

| Out[10]= |  |
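To make the saving from this factorization concrete, here is a minimal sketch that builds a depthwise layer, a pointwise layer and an ordinary 3D convolution by hand and compares their parameter counts. The channel counts, input dimensions and variable names are hypothetical and chosen only for illustration; they are not taken from the MobileNet-3D V1 architecture, and the layers here keep their default biases.

```wolfram
(* Hypothetical sizes: 32 input channels, 64 output channels *)
cin = 32; cout = 64;
inputDims = {cin, 8, 28, 28}; (* channels, frames, height, width *)

(* Depthwise: one 3x3x3 filter per input channel ("ChannelGroups" equals the channel count) *)
depthwise = NetInitialize@ConvolutionLayer[cin, {3, 3, 3},
    "ChannelGroups" -> cin, PaddingSize -> 1, "Input" -> inputDims];

(* Pointwise: 1x1x1 convolution that mixes the channels *)
pointwise = NetInitialize@ConvolutionLayer[cout, {1, 1, 1}, "Input" -> inputDims];

(* Ordinary 3D convolution with the same kernel size, for comparison *)
full = NetInitialize@ConvolutionLayer[cout, {3, 3, 3},
    PaddingSize -> 1, "Input" -> inputDims];

(* Count the weights and biases of a layer *)
paramCount[layer_] := Information[layer, "ArraysTotalElementCount"];

separableCount = paramCount[depthwise] + paramCount[pointwise];
fullCount = paramCount[full];
{separableCount, fullCount}
```

Under these assumptions the depthwise and pointwise pair needs far fewer parameters than the single full convolution, which is the motivation for the split described above.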

Remove the last layers of the trained net so that the net produces a vector representation of a video:

| In[11]:= |

| Out[11]= |

Get a set of videos:

| In[12]:= |

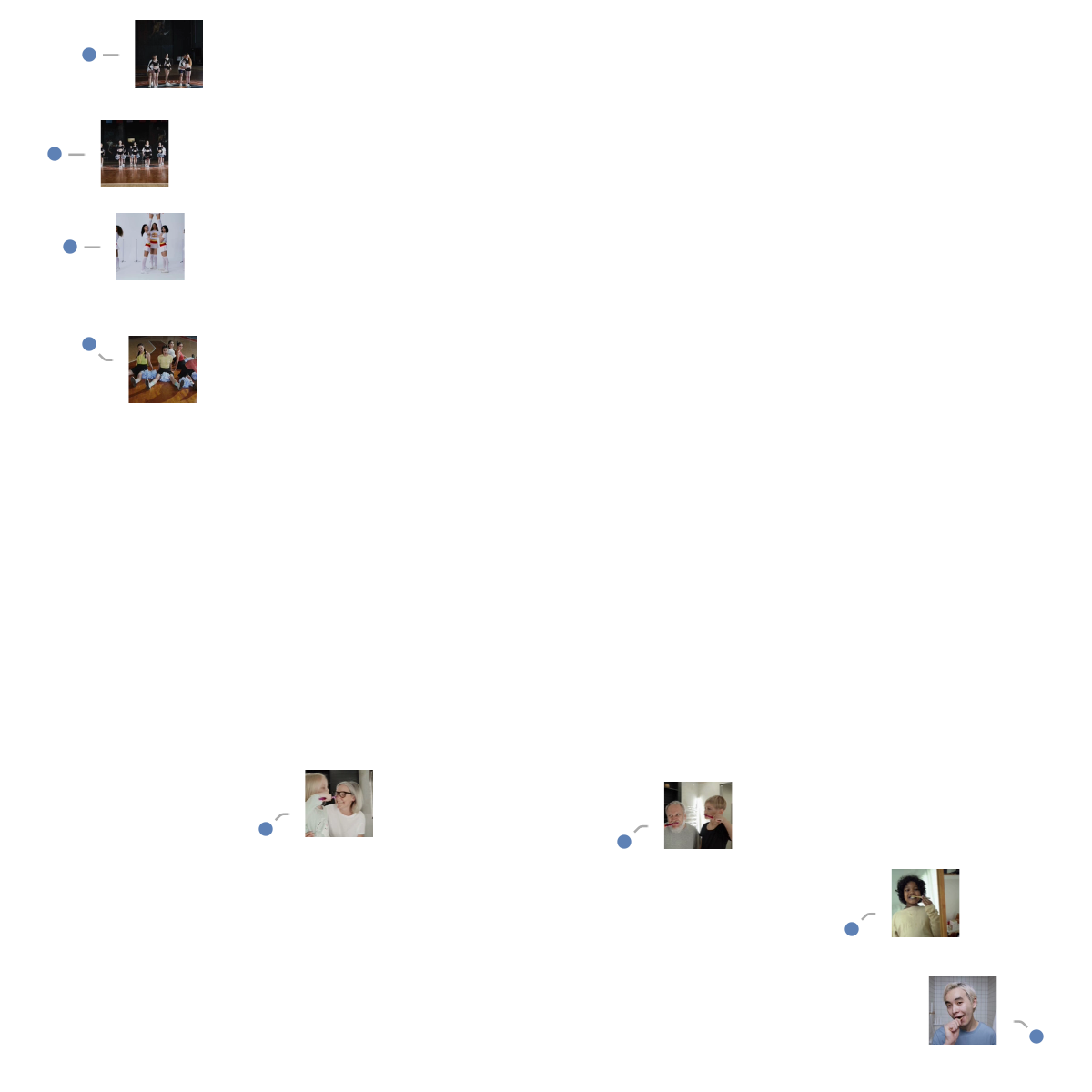

Visualize the features of a set of videos:

| In[13]:= | `FeatureSpacePlot[videos, FeatureExtractor -> extractor, LabelingFunction -> (Callout[Thumbnail@VideoExtractFrames[#1, Quantity[1, "Frames"]]] &), LabelingSize -> 50, ImageSize -> 600]` |

| Out[13]= |  |

Use the pre-trained model to build a classifier that distinguishes videos from two action classes not present in the dataset. Create a test set and a training set:

| In[14]:= |

| In[15]:= | `dataset = Join @@ KeyValueMap[Thread[VideoSplit[#1, Most@Table[Quantity[i, "Frames"], {i, 16, Information[#1, "FrameCount"][[1]], 16}]] -> #2] &, videos];` |
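To see which split points the `Most@Table[...]` expression in the cell above produces, the following sketch evaluates it on plain integers instead of a real video. The frame count of 70 is a hypothetical value standing in for `Information[video, "FrameCount"][[1]]`, and the variable names are illustrative.

```wolfram
(* Hypothetical frame count, standing in for Information[video, "FrameCount"][[1]] *)
frameCount = 70;

(* Every multiple of 16 up to the frame count *)
allMultiples = Table[i, {i, 16, frameCount, 16}];

(* Most drops the last multiple, as in the dataset construction *)
splitPoints = Most[allMultiples];

(* n split points cut a video into n + 1 clips *)
clipCount = Length[splitPoints] + 1;

{allMultiples, splitPoints, clipCount}
```

Dropping the last multiple avoids placing a split point at or near the very end of the video, so the remaining frames stay with the final clip.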

| In[16]:= |

Remove the last layers from the pre-trained net:

| In[17]:= |

| Out[17]= |

Create a new net composed of the pre-trained net followed by a linear layer and a softmax layer:

| In[18]:= |

| Out[18]= |

Train on the dataset, freezing all the weights except for those in the new "Linear" layer (use TargetDevice -> "GPU" to train on a GPU):

| In[19]:= |

| Out[19]= |
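The freezing pattern can be tried on a toy problem without downloading the model. In the sketch below, a small initialized `NetChain` stands in for the pre-trained extractor, the data are random, and all names other than the "Linear" layer are hypothetical. It assumes that a learning rate multiplier of 0 leaves a layer's arrays untouched and checks this after training.

```wolfram
SeedRandom[1];

(* Stand-in for the pre-trained extractor: a small, already initialized net *)
backbone = NetInitialize@NetChain[{LinearLayer[8], Ramp}, "Input" -> 4];

(* New head: an uninitialized linear layer plus softmax, with a class decoder *)
toyNet = NetChain[
   <|"pretrainedNet" -> backbone, "Linear" -> LinearLayer[2], "SoftMax" -> SoftmaxLayer[]|>,
   "Output" -> NetDecoder[{"Class", {"a", "b"}}]];

(* Made-up training data: random 4-vectors with random labels *)
data = Table[RandomReal[1, 4] -> RandomChoice[{"a", "b"}], 32];

(* A multiplier of 0 freezes a layer; only "Linear" is trained *)
trained = NetTrain[toyNet, data,
   LearningRateMultipliers -> {"Linear" -> 1, _ -> 0},
   MaxTrainingRounds -> 5];

(* Compare the backbone weights before and after training *)
before = Normal@NetExtract[backbone, {1, "Weights"}];
after = Normal@NetExtract[trained, {"pretrainedNet", 1, "Weights"}];
frozenQ = (before == after)
```

Because only the small head is optimized, this kind of training is usually fast and works with few examples, which is why it suits a dataset built from a handful of videos.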

Perfect accuracy is obtained on the test set:

| In[20]:= |

| Out[20]= |

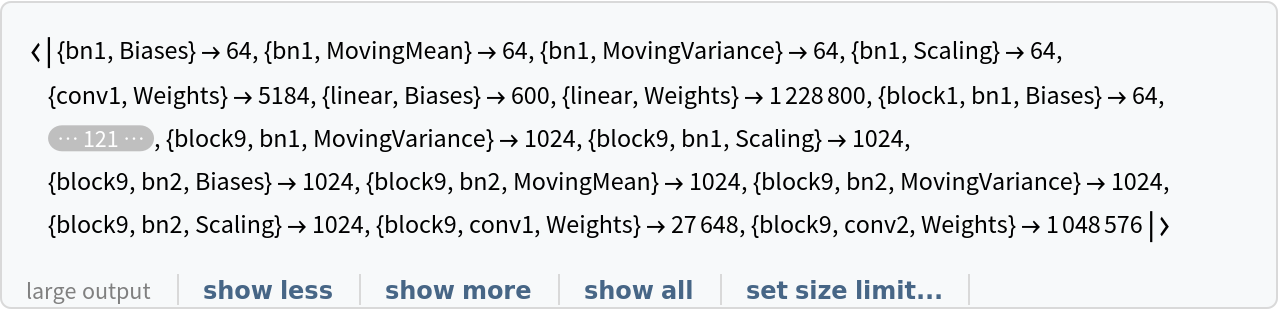

Inspect the number of parameters of all arrays in the net:

| In[21]:= |

| Out[21]= |  |

Obtain the total number of parameters:

| In[22]:= |

| Out[22]= |

Obtain the layer type counts:

| In[23]:= |

| Out[23]= |

Display the summary graphic:

| In[24]:= |

| Out[24]= |
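The input cells for these inspection steps are not preserved here. As a sketch of the kind of `Information` queries involved, the example below applies them to a small stand-in net with a single 3D convolution; the net and its sizes are hypothetical, and the same properties can be requested from any net.

```wolfram
(* Small stand-in net with a 3D convolution; sizes are arbitrary *)
toyNet = NetInitialize@NetChain[
    {ConvolutionLayer[8, {3, 3, 3}], Ramp, FlattenLayer[], LinearLayer[4], SoftmaxLayer[]},
    "Input" -> {3, 8, 16, 16}];

(* Number of elements in each array, keyed by the array's position in the net *)
perArray = Information[toyNet, "ArraysElementCounts"];

(* Total number of parameters *)
total = Information[toyNet, "ArraysTotalElementCount"];

(* How many layers of each type the net contains *)
layerTypes = Information[toyNet, "LayerTypeCounts"];

(* Summary graphic of the net structure *)
graphic = Information[toyNet, "SummaryGraphic"];

(* The total equals the sum of the per-array counts *)
total == Total[Values[perArray]]
```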

Export the net to the ONNX format:

| In[25]:= |

| Out[26]= |

Get the size of the ONNX file:

| In[27]:= |

| Out[27]= |

Check some metadata of the ONNX model:

| In[28]:= |

| Out[28]= |

Import the model back into the Wolfram Language. The NetEncoder and NetDecoder will be absent because ONNX does not support them:

| In[29]:= |

| Out[29]= |
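The ONNX round trip can be sketched on a small stand-in net, assuming a Wolfram Language version with ONNX import and export support. The net, the file name and the variable names below are hypothetical; the sketch exports to the temporary directory, reads back two metadata elements and re-imports the model.

```wolfram
(* Small stand-in classifier with a class decoder attached *)
toyNet = NetInitialize@NetChain[
    {LinearLayer[4], Ramp, LinearLayer[2], SoftmaxLayer[]},
    "Input" -> 3,
    "Output" -> NetDecoder[{"Class", {"a", "b"}}]];

(* Export to a hypothetical file in the temporary directory *)
onnxFile = Export[FileNameJoin[{$TemporaryDirectory, "toy-net.onnx"}], toyNet];

(* Size of the exported file in bytes *)
fileSize = FileByteCount[onnxFile];

(* Read two metadata elements of the ONNX model *)
{opsetVersion, irVersion} = {Import[onnxFile, "OperatorSetVersion"], Import[onnxFile, "IRVersion"]};

(* Import the model back; the class decoder is not part of the ONNX file *)
imported = Import[onnxFile];

(* Without the decoder, the output is a raw probability vector rather than a label *)
probabilities = imported[{1., 2., 3.}]
```

To recover class labels after the round trip, the decoder would have to be reattached to the imported net by hand.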