Incident Reporting System/zh: Difference between revisions

Updating to match new version of source page

Cookai1205 (talk | contribs) No edit summary


Research Findings Report on Incident Reporting 2023 (Findings Report Incident Reporting 2023.pdf)

Revision as of 18:30, 19 June 2024

About

Wiki

Project background

- Via wiki talk pages

- Via noticeboards

- Via email

- Via private discussion channels outside the wiki (Discord, IRC, etc.)

Project focus

This project

Product and technical updates

Test the Incident Reporting System Minimum Testable Product in Beta – November 10, 2023

Editors are invited to test an initial Minimum Testable Product (MTP) for the Incident Reporting System.

The Trust & Safety Product team has created a basic product version enabling a user to file a report from the talk page where an incident occurs.

Note: This product version is intended to help us learn about filing reports to a private email address (e.g., emergency@wikimedia.org or an Admin group). It does not cover all scenarios, such as reporting to a public noticeboard.

Your feedback is needed to determine if this starting approach is effective.

To test:

1. Visit any talk namespace page on Wikipedia in Beta that contains discussions. You can use the sample talk pages User talk:Testing and Talk:African Wild Dog. Make sure you are logged in.

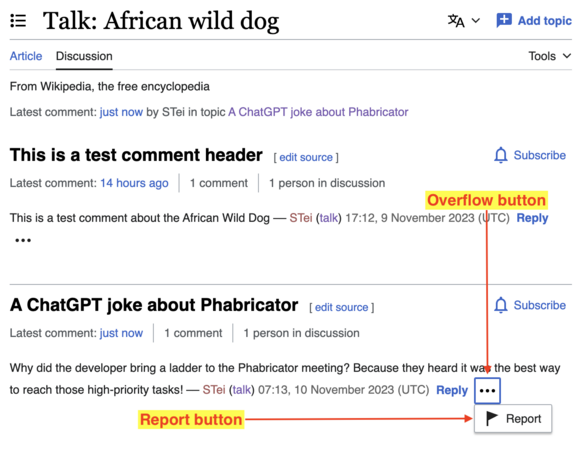

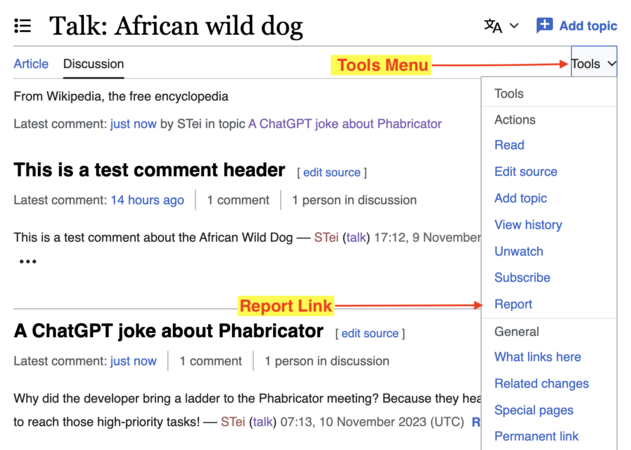

2. Next, click on the overflow button (vertical ellipsis) near the Reply link of any comment to open the overflow menu and click Report (see slide 1). You can also use the Report link in the Tools menu (see slide 2).

Slide 1: the Report option in a comment's overflow menu. Slide 2: the Report link in the Tools menu.

3. Proceed to file a report: fill in the form and submit it. An email will be sent to the Trust and Safety Product team, who will be the only ones to see your report. Please note that this is a test, so do not use it to report real incidents.

4. As you test, consider these questions:

- What do you think about this reporting process? In particular, what do you like or dislike about it?

- If you are familiar with extensions, how would you feel about having this on your wiki as an extension?

- Which issues have we missed at this initial reporting stage?

5. Following your test, please leave your feedback on the talk page.

Troubleshooting

If you can't find the overflow menu or Report links, or the form fails to submit, please ensure that:

If DiscussionTools doesn't load, a report can be filed from the Tools menu. If you can't file a second report, note that there is a limit of 1 report per day for non-confirmed users and 5 reports per day for autoconfirmed users. These requirements help reduce the possibility of malicious users abusing the system.

Update: Sharing incident reporting research findings – September 20, 2023

The Incident Reporting System project has completed research about harassment on selected pilot wikis.

The research, which started in early 2023, studied the Indonesian and Korean Wikipedias to understand harassment, how harassment is reported and how responders to reports go about their work.

The findings of the studies have been published.

See also

Four updates on the Incident Reporting project – July 27, 2023

Hello everyone! For the past couple of months the Trust and Safety Product team has been working on finalising Phase 1 of the Incident Reporting System project.

The purpose of this phase was to define possible product direction and scope of the project with your feedback. We now have a better understanding of what to do next. Read more.

Project scope & MVP – November 8, 2022

Our main goal for the past couple of months was to understand the problem space and user expectations around this project. The way we would like to approach this is to build something small, a minimum viable product (MVP), that will help us figure out whether the basic experience we are looking at actually works. Read more.

Process

Figuring out how to manage incident reporting in the Wikimedia space is not an easy task. There are a lot of risks and a lot of unknowns.

As this is a complex project, it needs to be split into multiple iterations and project phases. For each of these phases we will hold one or several cycles of discussions in order to ensure that we are on the right track and that we incorporate community feedback early, before jumping into large chunks of work.

Phase 1

Preliminary research: collect feedback and read through existing documentation.

Conduct interviews in order to better understand the problem space and identify critical questions we need to answer.

Define and discuss possible product direction and scope of project. Identify possible pilot wikis.

At the end of this phase we should have a solid understanding of what we are trying to do.

Phase 2

Create prototypes to illustrate the ideas that came up in Phase 1.

Create a list of possible options for more in-depth consultation and review.

Phase 3

Identify and prioritize the best possible ideas.

Transition to software development and break down work in Phabricator tickets.

Continue the cycle for the next iterations.

Research

September 21, 2023 update: Sharing incident reporting research findings

The Incident Reporting System project has completed research about harassment on selected pilot wikis.

The research, which started in early 2023, studied the Indonesian and Korean Wikipedias to understand harassment, how harassment is reported and how responders to reports go about their work.

The findings of the studies have been published.

In summary, we received valuable insights on the improvements needed for both onwiki and offwiki incident reporting. We also learned more about the communities' needs, which can be used as valuable input for the Incident Reporting tool.

We are keen to share these findings with you; the report has more comprehensive information.

Please leave any feedback and questions on the talk page.

The following document is a completed review of the research on online harassment on Wikimedia projects that the Wikimedia Foundation conducted from 2015 to 2022. In this review we've identified major themes, insights, and areas of concern, and provided direct links to the literature.

Previous work

The Trust and Safety Tools team has been studying previous research and community consultations to inform our work. We revisited the Community health initiative User reporting system proposal and the User reporting system consultation of 2019. We have also been trying to map out some of the conflict resolution flows across wikis to understand how communities are currently managing conflicts. Below is a map of the Italian Wiki conflict resolution flow. It has notes on opportunities for automation.

Frequently asked questions

Q: Is there data available about how many incidents are reported per year?

A: Right now there is not a lot of clear data we can use, for two reasons. First, issues are reported in various ways, and those differ from community to community. Capturing that data completely and cleanly is highly complicated and would be very time-consuming. Second, the interpretation of issues also differs. Some things that are interpreted as harassment are just wiki business (e.g. deleting a promotional article). Review of harassment may also need cultural or community context. We cannot automate and visualize data or count it objectively. The incident reporting system is an opportunity to solve some of these data needs.

Q: How is harassment being defined?

A: Please see the definitions in the Universal Code of Conduct.

Q: How many staff and volunteers will be needed to support the IRS?

A: The magnitude of the problem is currently unknown, so the number of people needed to support the IRS is also unknown. Experimenting with the minimum viable product will provide some insight into the number of people needed to support the IRS.

Q: What is the purpose of the MVP (minimum viable product)?

A: The MVP is an experiment and an opportunity to learn. This first experimental work will answer the questions that we have right now. The results will then guide future plans.

Q: What questions are you trying to answer with the minimum viable product?

A: Here are the questions we need to answer:

- What kind of reports will people file?

- How many people will file reports?

- How many people would we need in order to process them?

- How big is this problem?

- Can we get a clearer picture of the magnitude of harassment issues? Can we get some data around the number of reports? Is harassment underreported or overreported?

- Are people currently not reporting harassment because it doesn’t happen or because they don’t know how?

- Will this be a lot to handle with our current setup, or not?

- How many reports are valid complaints, and how many come from people who don't understand the wiki process? Can we distinguish valid complaints and filter out invalid reports to save volunteer or staff time?

- Will we receive lots of reports filed by people who are upset that their edits were reverted or their page was deleted? What will we do with them?

Q: How does the Wikimedia movement compare to how other big platforms like Facebook/Reddit handle harassment?

A: While no other online platform is an exact counterpart to ours, the Wikimedia movement is most often compared with Facebook and Reddit in regard to how we handle harassment. It is important to note that nobody has solved harassment. Other platforms struggle with content moderation, and they often have paid staff who try to deal with it. Two major differences between us and Reddit and Facebook are the globally collaborative nature of our projects and the way communities work to resolve harassment at the community level.

Q: Is WMF trying to change existing community processes?

A: Our plan for the IRS is not to change any community process. The goal is to connect to existing processes. The ultimate goals are to:

- Make it easier for people who experience harassment to get help.

- Eliminate situations in which people do not report because they don’t know how to report harassment.

- Ensure harassment reports reach the right entities that handle them per local community processes.

- Ensure responders receive good reports and redirect unfounded complaints and issues to be handled elsewhere.